| // Copyright 2013 The Flutter Authors. All rights reserved. |

| // Use of this source code is governed by a BSD-style license that can be |

| // found in the LICENSE file. |

| part of dart.ui; |

| |

| // Examples can assume: |

| // // (for the example in Color) |

| // // ignore_for_file: use_full_hex_values_for_flutter_colors |

| // late ui.Image _image; |

| // dynamic _cacheImage(dynamic _, [dynamic __]) { } |

| // dynamic _drawImage(dynamic _, [dynamic __]) { } |

| |

| // Some methods in this file assert that their arguments are not null. These |

| // asserts are just to improve the error messages; they should only cover |

| // arguments that are either dereferenced _in Dart_, before being passed to the |

| // engine, or that the engine explicitly null-checks itself (after attempting to |

| // convert the argument to a native type). It should not be possible for a null |

| // or invalid value to be used by the engine even in release mode, since that |

| // would cause a crash. It is, however, acceptable for error messages to be much |

| // less useful or correct in release mode than in debug mode. |

| // |

| // Painting APIs will also warn about arguments representing NaN coordinates, |

| // which cannot be rendered by Skia. |

| |

| bool _rectIsValid(Rect rect) { |

| assert(!rect.hasNaN, 'Rect argument contained a NaN value.'); |

| return true; |

| } |

| |

| bool _rrectIsValid(RRect rrect) { |

| assert(!rrect.hasNaN, 'RRect argument contained a NaN value.'); |

| return true; |

| } |

| |

| bool _offsetIsValid(Offset offset) { |

| assert(!offset.dx.isNaN && !offset.dy.isNaN, 'Offset argument contained a NaN value.'); |

| return true; |

| } |

| |

| bool _matrix4IsValid(Float64List matrix4) { |

| assert(matrix4.length == 16, 'Matrix4 must have 16 entries.'); |

| assert(matrix4.every((double value) => value.isFinite), 'Matrix4 entries must be finite.'); |

| return true; |

| } |

| |

| bool _radiusIsValid(Radius radius) { |

| assert(!radius.x.isNaN && !radius.y.isNaN, 'Radius argument contained a NaN value.'); |

| return true; |

| } |

| |

| Color _scaleAlpha(Color a, double factor) { |

| return a.withAlpha((a.alpha * factor).round().clamp(0, 255)); |

| } |

| |

| /// An immutable 32 bit color value in ARGB format. |

| /// |

| /// Consider the light teal of the Flutter logo. It is fully opaque, with a red |

| /// channel value of 0x42 (66), a green channel value of 0xA5 (165), and a blue |

| /// channel value of 0xF5 (245). In the common "hash syntax" for color values, |

| /// it would be described as `#42A5F5`. |

| /// |

| /// Here are some ways it could be constructed: |

| /// |

| /// ```dart |

| /// Color c1 = const Color(0xFF42A5F5); |

| /// Color c2 = const Color.fromARGB(0xFF, 0x42, 0xA5, 0xF5); |

| /// Color c3 = const Color.fromARGB(255, 66, 165, 245); |

| /// Color c4 = const Color.fromRGBO(66, 165, 245, 1.0); |

| /// ``` |

| /// |

| /// If you are having a problem with `Color` wherein it seems your color is just |

| /// not painting, check to make sure you are specifying the full 8 hexadecimal |

| /// digits. If you only specify six, then the leading two digits are assumed to |

| /// be zero, which means fully-transparent: |

| /// |

| /// ```dart |

| /// Color c1 = const Color(0xFFFFFF); // fully transparent white (invisible) |

| /// Color c2 = const Color(0xFFFFFFFF); // fully opaque white (visible) |

| /// ``` |

| /// |

| /// See also: |

| /// |

| /// * [Colors](https://api.flutter.dev/flutter/material/Colors-class.html), which |

| /// defines the colors found in the Material Design specification. |

| class Color { |

| /// Construct a color from the lower 32 bits of an [int]. |

| /// |

| /// The bits are interpreted as follows: |

| /// |

| /// * Bits 24-31 are the alpha value. |

| /// * Bits 16-23 are the red value. |

| /// * Bits 8-15 are the green value. |

| /// * Bits 0-7 are the blue value. |

| /// |

| /// In other words, if AA is the alpha value in hex, RR the red value in hex, |

| /// GG the green value in hex, and BB the blue value in hex, a color can be |

| /// expressed as `const Color(0xAARRGGBB)`. |

| /// |

| /// For example, to get a fully opaque orange, you would use `const |

| /// Color(0xFFFF9000)` (`FF` for the alpha, `FF` for the red, `90` for the |

| /// green, and `00` for the blue). |

| const Color(int value) : value = value & 0xFFFFFFFF; |

| |

| /// Construct a color from the lower 8 bits of four integers. |

| /// |

| /// * `a` is the alpha value, with 0 being transparent and 255 being fully |

| /// opaque. |

| /// * `r` is [red], from 0 to 255. |

| /// * `g` is [green], from 0 to 255. |

| /// * `b` is [blue], from 0 to 255. |

| /// |

| /// Out of range values are brought into range using modulo 256; that is, only |

| /// the lower 8 bits of each argument are used. |

| /// |
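
| /// As a minimal sketch of how only the lower 8 bits of each argument are kept |

| /// (the function name `wrapExample` is hypothetical, and the snippet assumes |

| /// an environment where `dart:ui` is available, such as a Flutter app or test): |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// // Hypothetical helper, used only for illustration. |

| /// Color wrapExample() { |

| ///   // 300 & 0xff == 44 and 256 & 0xff == 0, so this is the same color as |

| ///   // Color.fromARGB(255, 44, 0, 128). |

| ///   return const Color.fromARGB(255, 300, 256, 128); |

| /// } |

| /// ``` |

| /// |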

| /// See also [fromRGBO], which takes the alpha value as a floating point |

| /// value. |

| const Color.fromARGB(int a, int r, int g, int b) : |

| value = (((a & 0xff) << 24) | |

| ((r & 0xff) << 16) | |

| ((g & 0xff) << 8) | |

| ((b & 0xff) << 0)) & 0xFFFFFFFF; |

| |

| /// Create a color from red, green, blue, and opacity, similar to `rgba()` in CSS. |

| /// |

| /// * `r` is [red], from 0 to 255. |

| /// * `g` is [green], from 0 to 255. |

| /// * `b` is [blue], from 0 to 255. |

| /// * `opacity` is the alpha channel of this color as a double, with 0.0 being |

| /// transparent and 1.0 being fully opaque. |

| /// |

| /// Out of range values are brought into range using modulo 256. |

| /// |

| /// See also [fromARGB], which takes the opacity as an integer value. |

| const Color.fromRGBO(int r, int g, int b, double opacity) : |

| value = ((((opacity * 0xff ~/ 1) & 0xff) << 24) | |

| ((r & 0xff) << 16) | |

| ((g & 0xff) << 8) | |

| ((b & 0xff) << 0)) & 0xFFFFFFFF; |

| |

| /// A 32 bit value representing this color. |

| /// |

| /// The bits are assigned as follows: |

| /// |

| /// * Bits 24-31 are the alpha value. |

| /// * Bits 16-23 are the red value. |

| /// * Bits 8-15 are the green value. |

| /// * Bits 0-7 are the blue value. |

| final int value; |

| |

| /// The alpha channel of this color in an 8 bit value. |

| /// |

| /// A value of 0 means this color is fully transparent. A value of 255 means |

| /// this color is fully opaque. |

| int get alpha => (0xff000000 & value) >> 24; |

| |

| /// The alpha channel of this color as a double. |

| /// |

| /// A value of 0.0 means this color is fully transparent. A value of 1.0 means |

| /// this color is fully opaque. |

| double get opacity => alpha / 0xFF; |

| |

| /// The red channel of this color in an 8 bit value. |

| int get red => (0x00ff0000 & value) >> 16; |

| |

| /// The green channel of this color in an 8 bit value. |

| int get green => (0x0000ff00 & value) >> 8; |

| |

| /// The blue channel of this color in an 8 bit value. |

| int get blue => (0x000000ff & value) >> 0; |

| |

| /// Returns a new color that matches this color with the alpha channel |

| /// replaced with `a` (which ranges from 0 to 255). |

| /// |

| /// Out of range values are not clamped; only the lower 8 bits of `a` are used. |

| Color withAlpha(int a) { |

| return Color.fromARGB(a, red, green, blue); |

| } |

| |

| /// Returns a new color that matches this color with the alpha channel |

| /// replaced with the given `opacity` (which ranges from 0.0 to 1.0). |

| /// |
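
| /// As a rough illustration (the helper name `halfOpacityAlphas` is |

| /// hypothetical, and `dart:ui` is assumed to be available), this method rounds |

| /// to the nearest alpha value, whereas [Color.fromRGBO] truncates, so the two |

| /// can differ by one: |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// // Hypothetical helper, used only for illustration. |

| /// List<int> halfOpacityAlphas() { |

| ///   const Color teal = Color(0xFF42A5F5); |

| ///   // withOpacity rounds: (255.0 * 0.5).round() == 128. |

| ///   final int rounded = teal.withOpacity(0.5).alpha; |

| ///   // fromRGBO truncates: (0.5 * 0xff) ~/ 1 == 127. |

| ///   final int truncated = const Color.fromRGBO(0x42, 0xA5, 0xF5, 0.5).alpha; |

| ///   return <int>[rounded, truncated]; |

| /// } |

| /// ``` |

| /// |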

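| /// Out of range values fail an assertion in debug mode. In release mode they |

| /// are not clamped; only the lower 8 bits of the computed alpha are used. |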

| Color withOpacity(double opacity) { |

| assert(opacity >= 0.0 && opacity <= 1.0); |

| return withAlpha((255.0 * opacity).round()); |

| } |

| |

| /// Returns a new color that matches this color with the red channel replaced |

| /// with `r` (which ranges from 0 to 255). |

| /// |

| /// Out of range values are not clamped; only the lower 8 bits of `r` are used. |

| Color withRed(int r) { |

| return Color.fromARGB(alpha, r, green, blue); |

| } |

| |

| /// Returns a new color that matches this color with the green channel |

| /// replaced with `g` (which ranges from 0 to 255). |

| /// |

| /// Out of range values are not clamped; only the lower 8 bits of `g` are used. |

| Color withGreen(int g) { |

| return Color.fromARGB(alpha, red, g, blue); |

| } |

| |

| /// Returns a new color that matches this color with the blue channel replaced |

| /// with `b` (which ranges from 0 to 255). |

| /// |

| /// Out of range values are not clamped; only the lower 8 bits of `b` are used. |

| Color withBlue(int b) { |

| return Color.fromARGB(alpha, red, green, b); |

| } |

| |

| // See <https://www.w3.org/TR/WCAG20/#relativeluminancedef> |

| static double _linearizeColorComponent(double component) { |

| if (component <= 0.03928) { |

| return component / 12.92; |

| } |

| return math.pow((component + 0.055) / 1.055, 2.4) as double; |

| } |

| |

| /// Returns a brightness value between 0 for darkest and 1 for lightest. |

| /// |

| /// Represents the relative luminance of the color. This value is computationally |

| /// expensive to calculate. |

| /// |
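
| /// One possible use, sketched under the assumption that a threshold of 0.5 |

| /// suits the caller (the helper name and the threshold are hypothetical, and |

| /// `dart:ui` is assumed to be available), is choosing a text color that |

| /// contrasts with a background: |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// // Hypothetical helper: picks black or white text for a background color. |

| /// // The 0.5 threshold is an illustrative assumption, not a recommendation |

| /// // made by this library. |

| /// Color contrastingTextColor(Color background) { |

| ///   return background.computeLuminance() > 0.5 |

| ///       ? const Color(0xFF000000) // dark text on a light background |

| ///       : const Color(0xFFFFFFFF); // light text on a dark background |

| /// } |

| /// ``` |

| /// |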

| /// See <https://en.wikipedia.org/wiki/Relative_luminance>. |

| double computeLuminance() { |

| // See <https://www.w3.org/TR/WCAG20/#relativeluminancedef> |

| final double R = _linearizeColorComponent(red / 0xFF); |

| final double G = _linearizeColorComponent(green / 0xFF); |

| final double B = _linearizeColorComponent(blue / 0xFF); |

| return 0.2126 * R + 0.7152 * G + 0.0722 * B; |

| } |

| |

| /// Linearly interpolate between two colors. |

| /// |

| /// This is intended to be fast but as a result may be ugly. Consider |

| /// [HSVColor] or writing custom logic for interpolating colors. |

| /// |

| /// If either color is null, this function linearly interpolates from a |

| /// transparent instance of the other color. This is usually preferable to |

| /// interpolating from [material.Colors.transparent] (`const |

| /// Color(0x00000000)`), which is specifically transparent _black_. |

| /// |
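
| /// A minimal sketch of the null case (the helper name `fadeInRed` is |

| /// hypothetical, and `dart:ui` is assumed to be available): |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// // Hypothetical helper, used only for illustration. |

| /// Color? fadeInRed(double t) { |

| ///   const Color red = Color(0xFFFF0000); |

| ///   // With a null start color, only the alpha of `red` is scaled by `t`; |

| ///   // the red, green, and blue channels are left unchanged. |

| ///   return Color.lerp(null, red, t); |

| /// } |

| /// ``` |

| /// |

| /// Here `fadeInRed(0.5)` is `Color(0x80FF0000)`: the alpha is scaled to 128 |

| /// while the red channel stays at 255. |

| /// |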

| /// The `t` argument represents position on the timeline, with 0.0 meaning |

| /// that the interpolation has not started, returning `a` (or something |

| /// equivalent to `a`), 1.0 meaning that the interpolation has finished, |

| /// returning `b` (or something equivalent to `b`), and values in between |

| /// meaning that the interpolation is at the relevant point on the timeline |

| /// between `a` and `b`. The interpolation can be extrapolated beyond 0.0 and |

| /// 1.0, so negative values and values greater than 1.0 are valid (and can |

| /// easily be generated by curves such as [Curves.elasticInOut]). Each channel |

| /// will be clamped to the range 0 to 255. |

| /// |

| /// Values for `t` are usually obtained from an [Animation<double>], such as |

| /// an [AnimationController]. |

| static Color? lerp(Color? a, Color? b, double t) { |

| if (b == null) { |

| if (a == null) { |

| return null; |

| } else { |

| return _scaleAlpha(a, 1.0 - t); |

| } |

| } else { |

| if (a == null) { |

| return _scaleAlpha(b, t); |

| } else { |

| return Color.fromARGB( |

| _clampInt(_lerpInt(a.alpha, b.alpha, t).toInt(), 0, 255), |

| _clampInt(_lerpInt(a.red, b.red, t).toInt(), 0, 255), |

| _clampInt(_lerpInt(a.green, b.green, t).toInt(), 0, 255), |

| _clampInt(_lerpInt(a.blue, b.blue, t).toInt(), 0, 255), |

| ); |

| } |

| } |

| } |

| |

| /// Composite a (possibly translucent) foreground color over a background |

| /// color, and return the resulting combined color. |

| /// |

| /// This uses standard alpha blending ("SRC over DST") rules to produce a |

| /// blended color from two colors. This can be used as a performance |

| /// enhancement when trying to avoid needless alpha blending compositing |

| /// operations for two things that are solid colors with the same shape, but |

| /// overlay each other: instead, just paint one with the combined color. |
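
| /// |

| /// A minimal sketch of the opaque-background case (the helper name |

| /// `scrimOverWhite` is hypothetical, and `dart:ui` is assumed to be |

| /// available): |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// // Hypothetical helper, used only for illustration. |

| /// Color scrimOverWhite() { |

| ///   const Color scrim = Color(0x80000000); // black at alpha 128 |

| ///   const Color white = Color(0xFFFFFFFF); // fully opaque background |

| ///   // Opaque-background case: each color channel is |

| ///   // (128 * 0 + 127 * 255) ~/ 255 == 127, and the output alpha is 255. |

| ///   return Color.alphaBlend(scrim, white); |

| /// } |

| /// ``` |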

| static Color alphaBlend(Color foreground, Color background) { |

| final int alpha = foreground.alpha; |

| if (alpha == 0x00) { // Foreground completely transparent. |

| return background; |

| } |

| final int invAlpha = 0xff - alpha; |

| int backAlpha = background.alpha; |

| if (backAlpha == 0xff) { // Opaque background case |

| return Color.fromARGB( |

| 0xff, |

| (alpha * foreground.red + invAlpha * background.red) ~/ 0xff, |

| (alpha * foreground.green + invAlpha * background.green) ~/ 0xff, |

| (alpha * foreground.blue + invAlpha * background.blue) ~/ 0xff, |

| ); |

| } else { // General case |

| backAlpha = (backAlpha * invAlpha) ~/ 0xff; |

| final int outAlpha = alpha + backAlpha; |

| assert(outAlpha != 0x00); |

| return Color.fromARGB( |

| outAlpha, |

| (foreground.red * alpha + background.red * backAlpha) ~/ outAlpha, |

| (foreground.green * alpha + background.green * backAlpha) ~/ outAlpha, |

| (foreground.blue * alpha + background.blue * backAlpha) ~/ outAlpha, |

| ); |

| } |

| } |

| |

| /// Returns an alpha value representative of the provided [opacity] value. |

| /// |

| /// The [opacity] value may not be null. Values outside the range 0.0 to 1.0 |

| /// are clamped to that range before being converted. |

| static int getAlphaFromOpacity(double opacity) { |

| return (clampDouble(opacity, 0.0, 1.0) * 255).round(); |

| } |

| |

| @override |

| bool operator ==(Object other) { |

| if (identical(this, other)) { |

| return true; |

| } |

| if (other.runtimeType != runtimeType) { |

| return false; |

| } |

| return other is Color |

| && other.value == value; |

| } |

| |

| @override |

| int get hashCode => value.hashCode; |

| |

| @override |

| String toString() => 'Color(0x${value.toRadixString(16).padLeft(8, '0')})'; |

| } |

| |

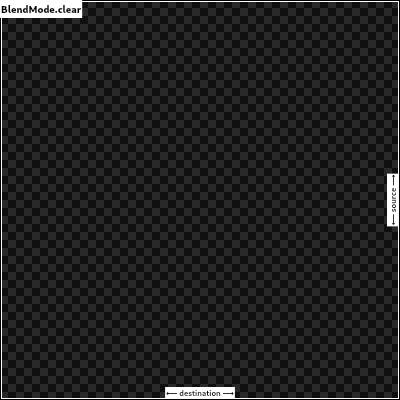

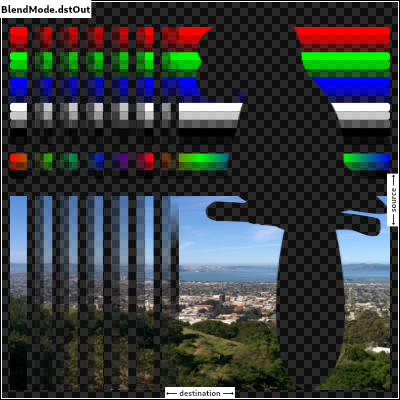

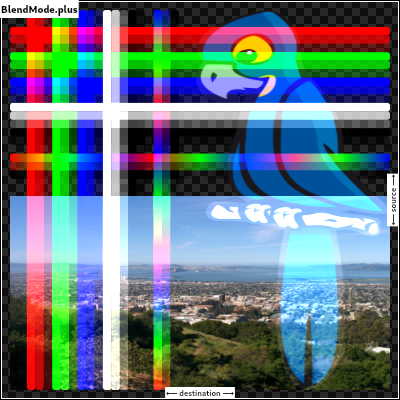

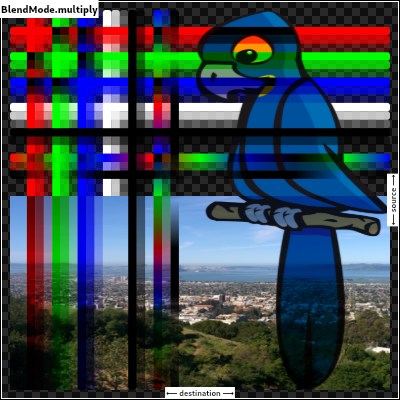

| /// Algorithms to use when painting on the canvas. |

| /// |

| /// When drawing a shape or image onto a canvas, different algorithms can be |

| /// used to blend the pixels. The different values of [BlendMode] specify |

| /// different such algorithms. |

| /// |

| /// Each algorithm has two inputs, the _source_, which is the image being drawn, |

| /// and the _destination_, which is the image into which the source image is |

| /// being composited. The destination is often thought of as the _background_. |

| /// The source and destination both have four color channels, the red, green, |

| /// blue, and alpha channels. These are typically represented as numbers in the |

| /// range 0.0 to 1.0. The output of the algorithm also has these same four |

| /// channels, with values computed from the source and destination. |

| /// |

| /// The documentation of each value below describes how the algorithm works. In |

| /// each case, an image shows the output of blending a source image with a |

| /// destination image. In the images below, the destination is represented by an |

| /// image with horizontal lines and an opaque landscape photograph, and the |

| /// source is represented by an image with vertical lines (the same lines but |

| /// rotated) and a bird clip-art image. The [src] mode shows only the source |

| /// image, and the [dst] mode shows only the destination image. In the |

| /// documentation below, the transparency is illustrated by a checkerboard |

| /// pattern. The [clear] mode drops both the source and destination, resulting |

| /// in an output that is entirely transparent (illustrated by a solid |

| /// checkerboard pattern). |

| /// |

| /// The horizontal and vertical bars in these images show the red, green, and |

| /// blue channels with varying opacity levels, then all three color channels |

| /// together with those same varying opacity levels, then all three color |

| /// channels set to zero with those varying opacity levels, then two bars showing |

| /// a red/green/blue repeating gradient, the first with full opacity and the |

| /// second with partial opacity, and finally a bar with the three color channels |

| /// set to zero but the opacity varying in a repeating gradient. |

| /// |

| /// ## Application to the [Canvas] API |

| /// |

| /// When using [Canvas.saveLayer] and [Canvas.restore], the blend mode of the |

| /// [Paint] given to the [Canvas.saveLayer] will be applied when |

| /// [Canvas.restore] is called. Each call to [Canvas.saveLayer] introduces a new |

| /// layer onto which shapes and images are painted; when [Canvas.restore] is |

| /// called, that layer is then composited onto the parent layer, with the source |

| /// being the most-recently-drawn shapes and images, and the destination being |

| /// the parent layer. (For the first [Canvas.saveLayer] call, the parent layer |

| /// is the canvas itself.) |

| /// |
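
| /// As a minimal sketch of this layering (the function name |

| /// `paintMultipliedCircle` and the chosen colors are hypothetical, and |

| /// `dart:ui` is assumed to be available): |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// // Hypothetical helper, used only for illustration. |

| /// void paintMultipliedCircle(Canvas canvas, Rect bounds) { |

| ///   // Destination: drawn directly onto the parent layer. |

| ///   canvas.drawRect(bounds, Paint()..color = const Color(0xFF42A5F5)); |

| ///   // Everything drawn between saveLayer and restore becomes the source. |

| ///   canvas.saveLayer(bounds, Paint()..blendMode = BlendMode.multiply); |

| ///   canvas.drawCircle( |

| ///     bounds.center, |

| ///     bounds.shortestSide / 4, |

| ///     Paint()..color = const Color(0xFFFF9000), |

| ///   ); |

| ///   // The layer is composited onto the parent here, using BlendMode.multiply. |

| ///   canvas.restore(); |

| /// } |

| /// ``` |

| /// |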

| /// See also: |

| /// |

| /// * [Paint.blendMode], which uses [BlendMode] to define the compositing |

| /// strategy. |

| enum BlendMode { |

| // This list comes from Skia's SkXfermode.h and the values (order) should be |

| // kept in sync. |

| // See: https://skia.org/docs/user/api/skpaint_overview/#SkXfermode |

| |

| /// Drop both the source and destination images, leaving nothing. |

| /// |

| /// This corresponds to the "clear" Porter-Duff operator. |

| /// |

| ///  |

| clear, |

| |

| /// Drop the destination image, only paint the source image. |

| /// |

| /// Conceptually, the destination is first cleared, then the source image is |

| /// painted. |

| /// |

| /// This corresponds to the "Copy" Porter-Duff operator. |

| /// |

| ///  |

| src, |

| |

| /// Drop the source image, only paint the destination image. |

| /// |

| /// Conceptually, the source image is discarded, leaving the destination |

| /// untouched. |

| /// |

| /// This corresponds to the "Destination" Porter-Duff operator. |

| /// |

| ///  |

| dst, |

| |

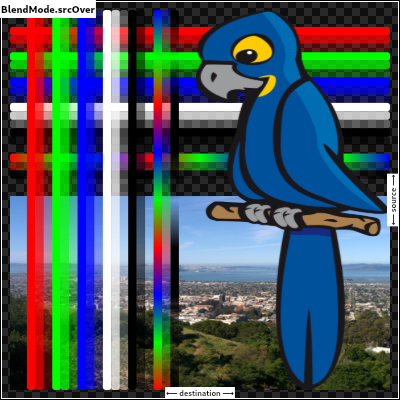

| /// Composite the source image over the destination image. |

| /// |

| /// This is the default value. It represents the most intuitive case, where |

| /// shapes are painted on top of what is below, with transparent areas showing |

| /// the destination layer. |

| /// |

| /// This corresponds to the "Source over Destination" Porter-Duff operator, |

| /// also known as the Painter's Algorithm. |

| /// |

| ///  |

| srcOver, |

| |

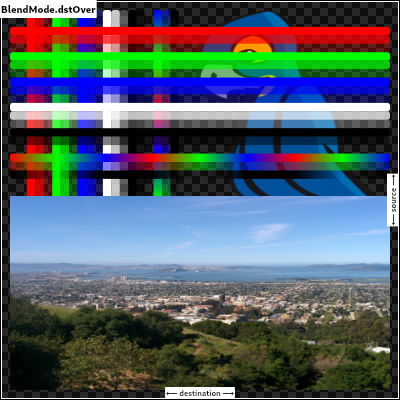

| /// Composite the source image under the destination image. |

| /// |

| /// This is the opposite of [srcOver]. |

| /// |

| /// This corresponds to the "Destination over Source" Porter-Duff operator. |

| /// |

| ///  |

| /// |

| /// This is useful when the source image should have been painted before the |

| /// destination image, but could not be. |

| dstOver, |

| |

| /// Show the source image, but only where the two images overlap. The |

| /// destination image is not rendered; it is treated merely as a mask. The |

| /// color channels of the destination are ignored; only the opacity has an |

| /// effect. |

| /// |

| /// To show the destination image instead, consider [dstIn]. |

| /// |

| /// To reverse the semantic of the mask (only showing the source where the |

| /// destination is absent, rather than where it is present), consider |

| /// [srcOut]. |

| /// |

| /// This corresponds to the "Source in Destination" Porter-Duff operator. |
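
| /// |

| /// A common way to use this mode as a mask, sketched here with a hypothetical |

| /// helper name and assuming `dart:ui` is available, is to tint drawn content |

| /// with a solid color through [ColorFilter.mode]: |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// // Hypothetical helper, used only for illustration. |

| /// Paint tintPaint(Color tint) { |

| ///   // With ColorFilter.mode, the given color is the source and the content |

| ///   // being drawn is the destination, so srcIn keeps the tint only where |

| ///   // the content is not transparent. |

| ///   return Paint()..colorFilter = ColorFilter.mode(tint, BlendMode.srcIn); |

| /// } |

| /// ``` |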

| /// |

| ///  |

| srcIn, |

| |

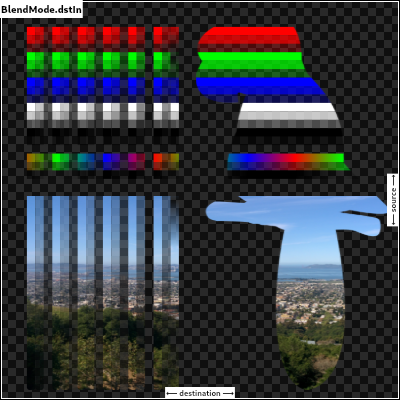

| /// Show the destination image, but only where the two images overlap. The |

| /// source image is not rendered; it is treated merely as a mask. The color |

| /// channels of the source are ignored; only the opacity has an effect. |

| /// |

| /// To show the source image instead, consider [srcIn]. |

| /// |

| /// To reverse the semantic of the mask (only showing the destination where the |

| /// source is absent, rather than where it is present), consider [dstOut]. |

| /// |

| /// This corresponds to the "Destination in Source" Porter-Duff operator. |

| /// |

| ///  |

| dstIn, |

| |

| /// Show the source image, but only where the two images do not overlap. The |

| /// destination image is not rendered; it is treated merely as a mask. The color |

| /// channels of the destination are ignored; only the opacity has an effect. |

| /// |

| /// To show the destination image instead, consider [dstOut]. |

| /// |

| /// To reverse the semantic of the mask (only showing the source where the |

| /// destination is present, rather than where it is absent), consider [srcIn]. |

| /// |

| /// This corresponds to the "Source out Destination" Porter-Duff operator. |

| /// |

| ///  |

| srcOut, |

| |

| /// Show the destination image, but only where the two images do not overlap. The |

| /// source image is not rendered; it is treated merely as a mask. The color |

| /// channels of the source are ignored; only the opacity has an effect. |

| /// |

| /// To show the source image instead, consider [srcOut]. |

| /// |

| /// To reverse the semantic of the mask (only showing the destination where the |

| /// source is present, rather than where it is absent), consider [dstIn]. |

| /// |

| /// This corresponds to the "Destination out Source" Porter-Duff operator. |

| /// |

| ///  |

| dstOut, |

| |

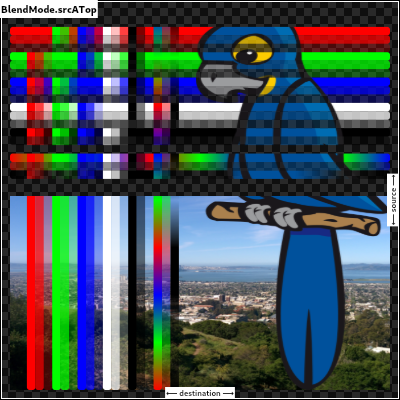

| /// Composite the source image over the destination image, but only where it |

| /// overlaps the destination. |

| /// |

| /// This corresponds to the "Source atop Destination" Porter-Duff operator. |

| /// |

| /// This is essentially the [srcOver] operator, but with the output's opacity |

| /// channel being set to that of the destination image instead of being a |

| /// combination of both images' opacity channels. |

| /// |

| /// For a variant with the destination on top instead of the source, see |

| /// [dstATop]. |

| /// |

| ///  |

| srcATop, |

| |

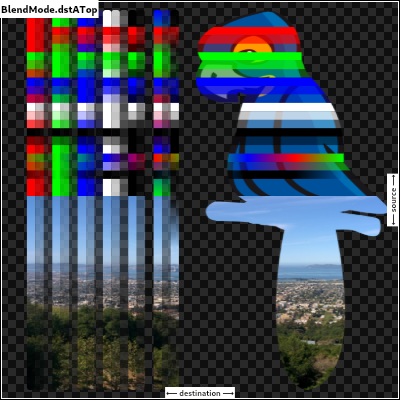

| /// Composite the destination image over the source image, but only where it |

| /// overlaps the source. |

| /// |

| /// This corresponds to the "Destination atop Source" Porter-Duff operator. |

| /// |

| /// This is essentially the [dstOver] operator, but with the output's opacity |

| /// channel being set to that of the source image instead of being a |

| /// combination of both images' opacity channels. |

| /// |

| /// For a variant with the source on top instead of the destination, see |

| /// [srcATop]. |

| /// |

| ///  |

| dstATop, |

| |

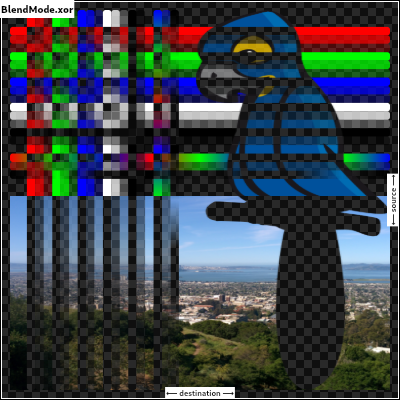

| /// Apply the Porter-Duff `xor` operator to the source and destination images |

| /// (a compositing operator, not a bitwise operation on pixel values). This |

| /// leaves transparency where they would overlap. |

| /// |

| /// This corresponds to the "Source xor Destination" Porter-Duff operator. |

| /// |

| ///  |

| xor, |

| |

| /// Sum the components of the source and destination images. |

| /// |

| /// Transparency in a pixel of one of the images reduces the contribution of |

| /// that image to the corresponding output pixel, as if the color of that |

| /// pixel in that image was darker. |

| /// |

| /// This corresponds to the "Source plus Destination" Porter-Duff operator. |

| /// |

| /// This is the right blend mode for cross-fading between two images. Consider |

| /// two images A and B, and an interpolation time variable _t_ (from 0.0 to |

| /// 1.0). To cross fade between them, A should be drawn with opacity 1.0 - _t_ |

| /// into a new layer using [BlendMode.srcOver], and B should be drawn on top |

| /// of it, at opacity _t_, into the same layer, using [BlendMode.plus]. |
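
| /// |

| /// The cross-fade described above could be sketched as follows. The function |

| /// name `paintCrossFade` is hypothetical, `dart:ui` is assumed to be |

| /// available, and the sketch assumes that the alpha of each [Paint.color] is |

| /// applied as the opacity of the drawn image: |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// // Hypothetical helper, used only for illustration. It assumes both images |

| /// // have the same size and that `t` is between 0.0 and 1.0. |

| /// void paintCrossFade(Canvas canvas, Rect bounds, Image a, Image b, double t) { |

| ///   canvas.saveLayer(bounds, Paint()); |

| ///   // A at opacity 1.0 - t, composited with the default BlendMode.srcOver. |

| ///   canvas.drawImage( |

| ///     a, |

| ///     bounds.topLeft, |

| ///     Paint()..color = Color.fromRGBO(0, 0, 0, 1.0 - t), |

| ///   ); |

| ///   // B at opacity t, added on top with BlendMode.plus. |

| ///   canvas.drawImage( |

| ///     b, |

| ///     bounds.topLeft, |

| ///     Paint() |

| ///       ..color = Color.fromRGBO(0, 0, 0, t) |

| ///       ..blendMode = BlendMode.plus, |

| ///   ); |

| ///   canvas.restore(); |

| /// } |

| /// ``` |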

| /// |

| ///  |

| plus, |

| |

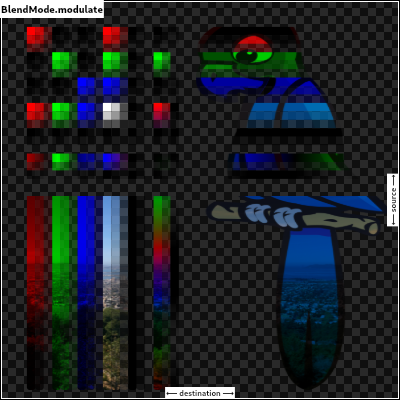

| /// Multiply the color components of the source and destination images. |

| /// |

| /// This can only result in the same or darker colors (multiplying by white, |

| /// 1.0, results in no change; multiplying by black, 0.0, results in black). |

| /// |

| /// When compositing two opaque images, this has a similar effect to overlapping |

| /// two transparencies on a projector. |

| /// |

| /// For a variant that also multiplies the alpha channel, consider [multiply]. |

| /// |

| ///  |

| /// |

| /// See also: |

| /// |

| /// * [screen], which does a similar computation but inverted. |

| /// * [overlay], which combines [modulate] and [screen] to favor the |

| /// destination image. |

| /// * [hardLight], which combines [modulate] and [screen] to favor the |

| /// source image. |

| modulate, |

| |

| // Following blend modes are defined in the CSS Compositing standard. |

| |

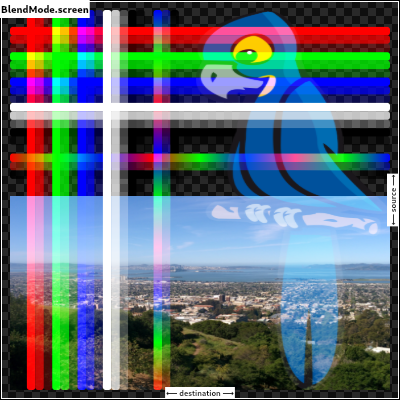

| /// Multiply the inverse of the components of the source and destination |

| /// images, and invert the result. |

| /// |

| /// Inverting the components means that a fully saturated channel (opaque |

| /// white) is treated as the value 0.0, and values normally treated as 0.0 |

| /// (black, transparent) are treated as 1.0. |

| /// |

| /// This is essentially the same as [modulate] blend mode, but with the values |

| /// of the colors inverted before the multiplication and the result being |

| /// inverted back before rendering. |

| /// |

| /// This can only result in the same or lighter colors (multiplying by black, |

| /// 1.0, results in no change; multiplying by white, 0.0, results in white). |

| /// Similarly, in the alpha channel, it can only result in more opaque colors. |

| /// |

| /// This has a similar effect to two projectors displaying their images on the |

| /// same screen simultaneously. |

| /// |

| ///  |

| /// |

| /// See also: |

| /// |

| /// * [modulate], which does a similar computation but without inverting the |

| /// values. |

| /// * [overlay], which combines [modulate] and [screen] to favor the |

| /// destination image. |

| /// * [hardLight], which combines [modulate] and [screen] to favor the |

| /// source image. |

| screen, // The last coeff mode. |

| |

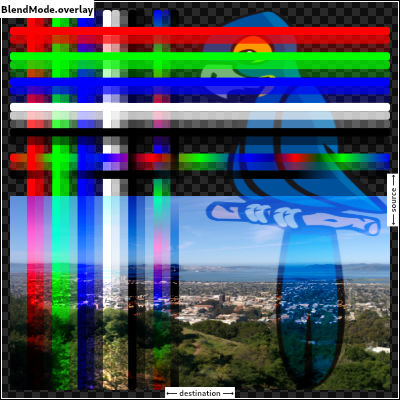

| /// Multiply the components of the source and destination images after |

| /// adjusting them to favor the destination. |

| /// |

| /// Specifically, if the destination value is smaller, this multiplies it with |

| /// the source value, whereas if the source value is smaller, it multiplies |

| /// the inverse of the source value with the inverse of the destination value, |

| /// then inverts the result. |

| /// |

| /// Inverting the components means that a fully saturated channel (opaque |

| /// white) is treated as the value 0.0, and values normally treated as 0.0 |

| /// (black, transparent) are treated as 1.0. |

| /// |

| ///  |

| /// |

| /// See also: |

| /// |

| /// * [modulate], which always multiplies the values. |

| /// * [screen], which always multiplies the inverses of the values. |

| /// * [hardLight], which is similar to [overlay] but favors the source image |

| /// instead of the destination image. |

| overlay, |

| |

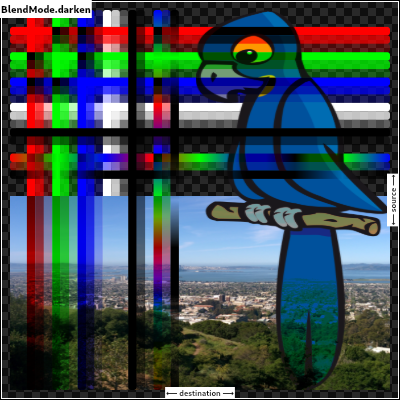

| /// Composite the source and destination image by choosing the lowest value |

| /// from each color channel. |

| /// |

| /// The opacity of the output image is computed in the same way as for |

| /// [srcOver]. |

| /// |

| ///  |

| darken, |

| |

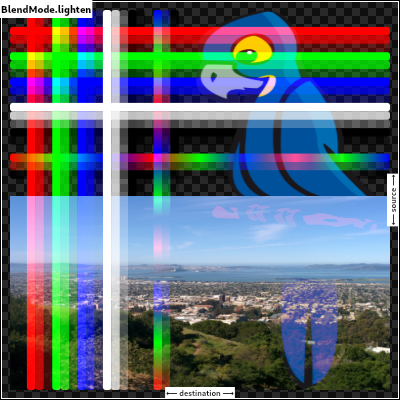

| /// Composite the source and destination image by choosing the highest value |

| /// from each color channel. |

| /// |

| /// The opacity of the output image is computed in the same way as for |

| /// [srcOver]. |

| /// |

| ///  |

| lighten, |

| |

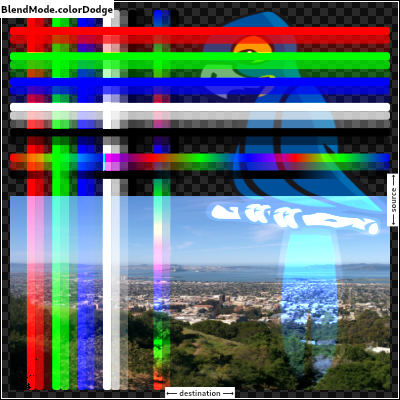

| /// Divide the destination by the inverse of the source. |

| /// |

| /// Inverting the components means that a fully saturated channel (opaque |

| /// white) is treated as the value 0.0, and values normally treated as 0.0 |

| /// (black, transparent) are treated as 1.0. |

| /// |

| ///  |

| colorDodge, |

| |

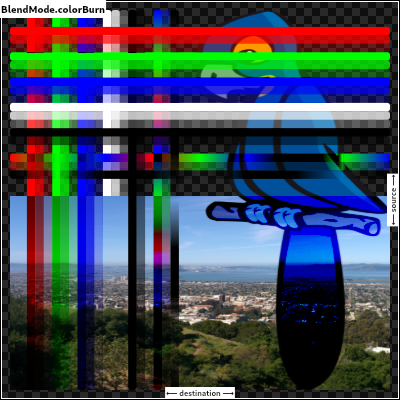

| /// Divide the inverse of the destination by the source, and invert the result. |

| /// |

| /// Inverting the components means that a fully saturated channel (opaque |

| /// white) is treated as the value 0.0, and values normally treated as 0.0 |

| /// (black, transparent) are treated as 1.0. |

| /// |

| ///  |

| colorBurn, |

| |

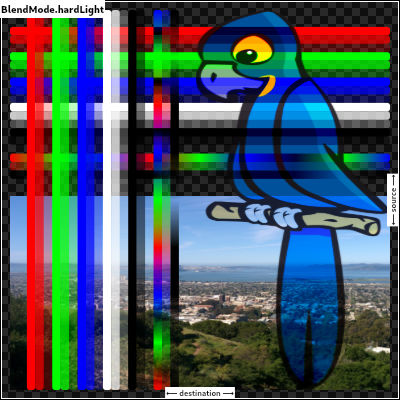

| /// Multiply the components of the source and destination images after |

| /// adjusting them to favor the source. |

| /// |

| /// Specifically, if the source value is smaller, this multiplies it with the |

| /// destination value, whereas if the destination value is smaller, it |

| /// multiplies the inverse of the destination value with the inverse of the |

| /// source value, then inverts the result. |

| /// |

| /// Inverting the components means that a fully saturated channel (opaque |

| /// white) is treated as the value 0.0, and values normally treated as 0.0 |

| /// (black, transparent) are treated as 1.0. |

| /// |

| ///  |

| /// |

| /// See also: |

| /// |

| /// * [modulate], which always multiplies the values. |

| /// * [screen], which always multiplies the inverses of the values. |

| /// * [overlay], which is similar to [hardLight] but favors the destination |

| /// image instead of the source image. |

| hardLight, |

| |

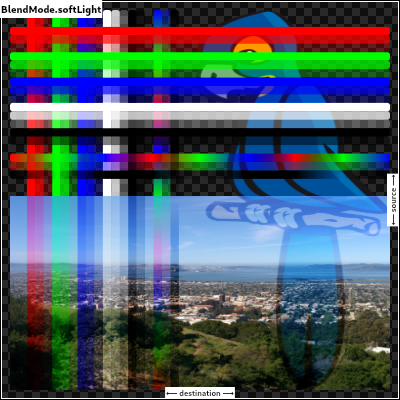

| /// Lighten the destination, similar to [colorDodge], for source values above |

| /// 0.5, and darken it, similar to [colorBurn], for source values below 0.5. |

| /// |

| /// This results in a similar but softer effect than [overlay]. |

| /// |

| ///  |

| /// |

| /// See also: |

| /// |

| /// * [color], which is a more subtle tinting effect. |

| softLight, |

| |

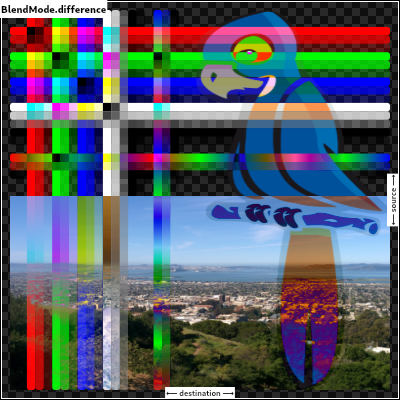

| /// Subtract the smaller value from the bigger value for each channel. |

| /// |

| /// Compositing black has no effect; compositing white inverts the colors of |

| /// the other image. |

| /// |

| /// The opacity of the output image is computed in the same way as for |

| /// [srcOver]. |

| /// |

| /// The effect is similar to [exclusion] but harsher. |

| /// |

| ///  |

| difference, |

| |

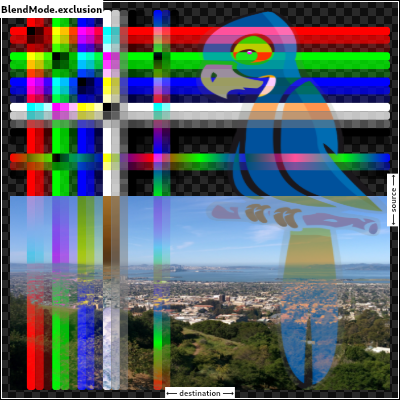

| /// Subtract double the product of the two images from the sum of the two |

| /// images. |

| /// |

| /// Compositing black has no effect; compositing white inverts the colors of |

| /// the other image. |

| /// |

| /// The opacity of the output image is computed in the same way as for |

| /// [srcOver]. |

| /// |

| /// The effect is similar to [difference] but softer. |

| /// |

| ///  |

| exclusion, |

| |

| /// Multiply the components of the source and destination images, including |

| /// the alpha channel. |

| /// |

| /// This can only result in the same or darker colors (multiplying by white, |

| /// 1.0, results in no change; multiplying by black, 0.0, results in black). |

| /// |

| /// Since the alpha channel is also multiplied, a fully-transparent pixel |

| /// (opacity 0.0) in one image results in a fully transparent pixel in the |

| /// output. This is similar to [dstIn], but with the colors combined. |

| /// |

| /// For a variant that multiplies the colors but does not multiply the alpha |

| /// channel, consider [modulate]. |

| /// |

| ///  |

| multiply, // The last separable mode. |

| |

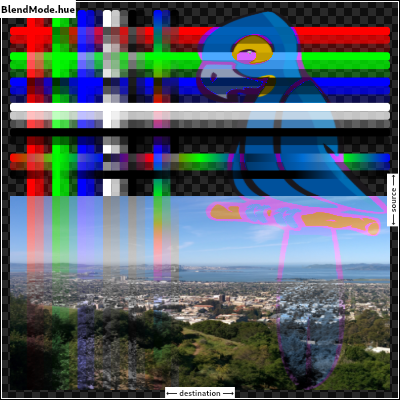

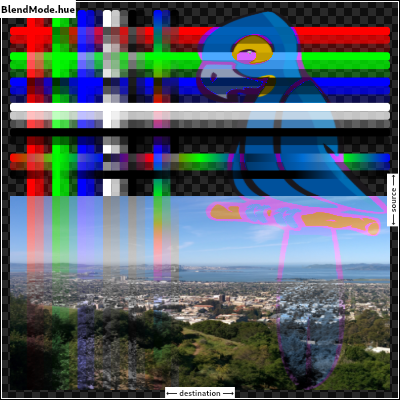

| /// Take the hue of the source image, and the saturation and luminosity of the |

| /// destination image. |

| /// |

| /// The effect is to tint the destination image with the source image. |

| /// |

| /// The opacity of the output image is computed in the same way as for |

| /// [srcOver]. Regions that are entirely transparent in the source image take |

| /// their hue from the destination. |

| /// |

| ///  |

| /// |

| /// See also: |

| /// |

| /// * [color], which is a similar but stronger effect as it also applies the |

| /// saturation of the source image. |

| /// * [HSVColor], which allows colors to be expressed using Hue rather than |

| /// the red/green/blue channels of [Color]. |

| hue, |

| |

| /// Take the saturation of the source image, and the hue and luminosity of the |

| /// destination image. |

| /// |

| /// The opacity of the output image is computed in the same way as for |

| /// [srcOver]. Regions that are entirely transparent in the source image take |

| /// their saturation from the destination. |

| /// |

| ///  |

| /// |

| /// See also: |

| /// |

| /// * [color], which also applies the hue of the source image. |

| /// * [luminosity], which applies the luminosity of the source image to the |

| /// destination. |

| saturation, |

| |

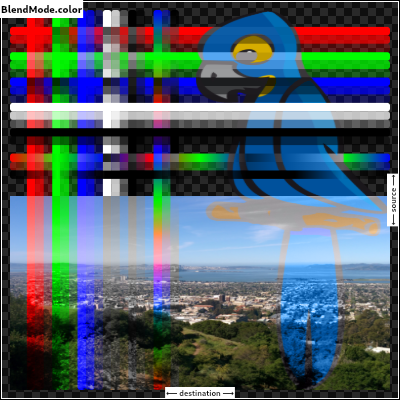

| /// Take the hue and saturation of the source image, and the luminosity of the |

| /// destination image. |

| /// |

| /// The effect is to tint the destination image with the source image. |

| /// |

| /// The opacity of the output image is computed in the same way as for |

| /// [srcOver]. Regions that are entirely transparent in the source image take |

| /// their hue and saturation from the destination. |

| /// |

| ///  |

| /// |

| /// See also: |

| /// |

| /// * [hue], which is a similar but weaker effect. |

| /// * [softLight], which is a similar tinting effect but also tints white. |

| /// * [saturation], which only applies the saturation of the source image. |

| color, |

| |

| /// Take the luminosity of the source image, and the hue and saturation of the |

| /// destination image. |

| /// |

| /// The opacity of the output image is computed in the same way as for |

| /// [srcOver]. Regions that are entirely transparent in the source image take |

| /// their luminosity from the destination. |

| /// |

| ///  |

| /// |

| /// See also: |

| /// |

| /// * [saturation], which applies the saturation of the source image to the |

| /// destination. |

| /// * [ImageFilter.blur], which can be used with [BackdropFilter] for a |

| /// related effect. |

| luminosity, |

| } |

| |

| /// Quality levels for image sampling in [ImageFilter] and [Shader] objects that sample |

| /// images and for [Canvas] operations that render images. |

| /// |

| /// When scaling up, quality is typically lowest at [none], higher at [low] and [medium], |

| /// and, for very large scale factors (over 10x), highest at [high]. |

| /// |

| /// When scaling down, [medium] provides the best quality especially when scaling an |

| /// image to less than half its size or for animating the scale factor between such |

| /// reductions. Otherwise, [low] and [high] provide similar effects for reductions of |

| /// between 50% and 100% but the image may lose detail and have dropouts below 50%. |

| /// |

| /// To get high quality when scaling images up and down, or when the scale is |

| /// unknown, [medium] is typically a good balanced choice. |
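
| /// |

| /// As a minimal sketch of that advice, the hypothetical helper below (the |

| /// name `drawScaled` is an assumption, not part of this library) draws an |

| /// image at a scale that is not known in advance and so picks [medium]: |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// void drawScaled(Canvas canvas, Image image, Rect dst) { |

| ///   // Sample the whole source image. |

| ///   final Rect src = Rect.fromLTWH( |

| ///     0, |

| ///     0, |

| ///     image.width.toDouble(), |

| ///     image.height.toDouble(), |

| ///   ); |

| ///   // The scale factor is unknown here, so use the balanced choice. |

| ///   final Paint paint = Paint()..filterQuality = FilterQuality.medium; |

| ///   canvas.drawImageRect(image, src, dst, paint); |

| /// } |

| /// ``` |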

| /// |

| ///  |

| /// |

| /// When building for the web using the `--web-renderer=html` option, filter |

| /// quality has no effect. All images are rendered using the respective |

| /// browser's default setting. |

| /// |

| /// See also: |

| /// |

| /// * [Paint.filterQuality], which is used to pass [FilterQuality] to the |

| /// engine while using drawImage calls on a [Canvas]. |

| /// * [ImageShader]. |

| /// * [ImageFilter.matrix]. |

| /// * [Canvas.drawImage]. |

| /// * [Canvas.drawImageRect]. |

| /// * [Canvas.drawImageNine]. |

| /// * [Canvas.drawAtlas]. |

| enum FilterQuality { |

| // This list and the values (order) should be kept in sync with the equivalent list |

| // in lib/ui/painting/image_filter.cc |

| |

| /// The fastest filtering method, albeit also the lowest quality. |

| /// |

| /// This value results in a "Nearest Neighbor" algorithm which just |

| /// repeats or eliminates pixels as an image is scaled up or down. |

| none, |

| |

| /// Better quality than [none], faster than [medium]. |

| /// |

| /// This value results in a "Bilinear" algorithm which smoothly |

| /// interpolates between pixels in an image. |

| low, |

| |

| /// The best all around filtering method that is only worse than [high] |

| /// at extremely large scale factors. |

| /// |

| /// This value improves upon the "Bilinear" algorithm specified by [low] |

| /// by utilizing a Mipmap that pre-computes high quality lower resolutions |

| /// of the image at half (and quarter and eighth, etc.) sizes and then |

| /// blends between those to prevent loss of detail at small scale sizes. |

| /// |

| /// {@template dart.ui.filterQuality.seeAlso} |

| /// See also: |

| /// |

| /// * [FilterQuality] class-level documentation that goes into detail about |

| /// relative qualities of the constant values. |

| /// {@endtemplate} |

| medium, |

| |

| /// Best possible quality when scaling up images by scale factors larger than |

| /// 5-10x. |

| /// |

| /// When images are scaled down, this can be worse than [medium] for scales |

| /// smaller than 0.5x, or when animating the scale factor. |

| /// |

| /// This option is also the slowest. |

| /// |

| /// This value results in a standard "Bicubic" algorithm which uses a 3rd order |

| /// equation to smooth the abrupt transitions between pixels while preserving |

| /// some of the sense of an edge and avoiding sharp peaks in the result. |

| /// |

| /// {@macro dart.ui.filterQuality.seeAlso} |

| high, |

| } |

| |

| /// Styles to use for line endings. |

| /// |

| /// See also: |

| /// |

| /// * [Paint.strokeCap] for how this value is used. |

| /// * [StrokeJoin] for the different kinds of line segment joins. |

| // These enum values must be kept in sync with DlStrokeCap. |

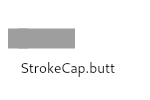

| enum StrokeCap { |

| /// Begin and end contours with a flat edge and no extension. |

| /// |

| ///  |

| /// |

| /// Compare to the [square] cap, which has the same shape, but extends past |

| /// the end of the line by half a stroke width. |

| butt, |

| |

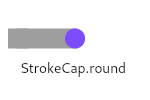

| /// Begin and end contours with a semi-circle extension. |

| /// |

| ///  |

| /// |

| /// The cap is colored in the diagram above to highlight it: in normal use it |

| /// is the same color as the line. |

| round, |

| |

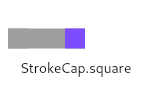

| /// Begin and end contours with a half square extension. This is |

| /// similar to extending each contour by half the stroke width (as |

| /// given by [Paint.strokeWidth]). |

| /// |

| ///  |

| /// |

| /// The cap is colored in the diagram above to highlight it: in normal use it |

| /// is the same color as the line. |

| /// |

| /// Compare to the [butt] cap, which has the same shape, but doesn't extend |

| /// past the end of the line. |

| square, |

| } |

| |

| /// Styles to use for line segment joins. |

| /// |

| /// This only affects line joins for polygons drawn by [Canvas.drawPath] and |

| /// rectangles, not points drawn as lines with [Canvas.drawPoints]. |

| /// |

| /// See also: |

| /// |

| /// * [Paint.strokeJoin] and [Paint.strokeMiterLimit] for how this value is |

| /// used. |

| /// * [StrokeCap] for the different kinds of line endings. |

| // These enum values must be kept in sync with DlStrokeJoin. |

| enum StrokeJoin { |

| /// Joins between line segments form sharp corners. |

| /// |

| /// {@animation 300 300 https://flutter.github.io/assets-for-api-docs/assets/dart-ui/miter_4_join.mp4} |

| /// |

| /// The center of the line segment is colored in the diagram above to |

| /// highlight the join, but in normal usage the join is the same color as the |

| /// line. |

| /// |

| /// See also: |

| /// |

| /// * [Paint.strokeJoin], used to set the line segment join style to this |

| /// value. |

| /// * [Paint.strokeMiterLimit], used to define when a miter is drawn instead |

| /// of a bevel when the join is set to this value. |

| miter, |

| |

| /// Joins between line segments are semi-circular. |

| /// |

| /// {@animation 300 300 https://flutter.github.io/assets-for-api-docs/assets/dart-ui/round_join.mp4} |

| /// |

| /// The center of the line segment is colored in the diagram above to |

| /// highlight the join, but in normal usage the join is the same color as the |

| /// line. |

| /// |

| /// See also: |

| /// |

| /// * [Paint.strokeJoin], used to set the line segment join style to this |

| /// value. |

| round, |

| |

| /// Joins between line segments connect the corners of the butt ends of the |

| /// line segments to give a beveled appearance. |

| /// |

| /// {@animation 300 300 https://flutter.github.io/assets-for-api-docs/assets/dart-ui/bevel_join.mp4} |

| /// |

| /// The center of the line segment is colored in the diagram above to |

| /// highlight the join, but in normal usage the join is the same color as the |

| /// line. |

| /// |

| /// See also: |

| /// |

| /// * [Paint.strokeJoin], used to set the line segment join style to this |

| /// value. |

| bevel, |

| } |

| |

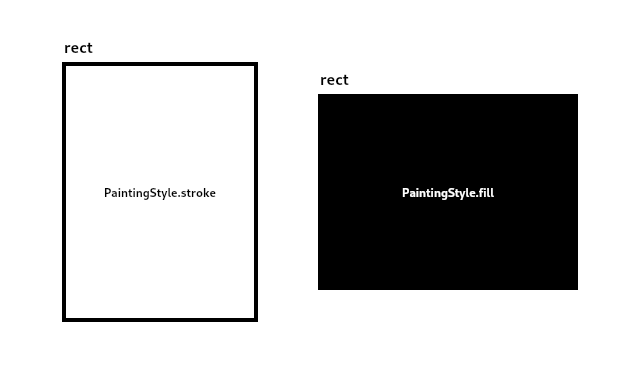

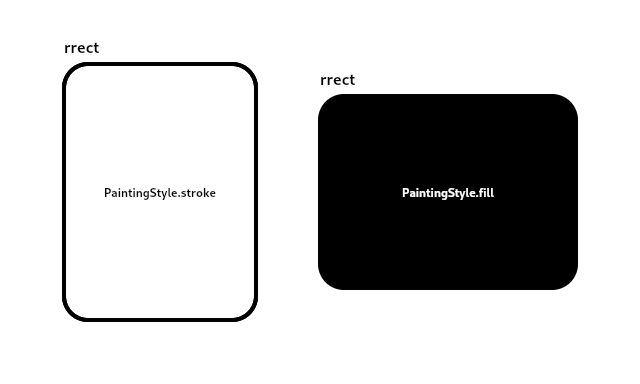

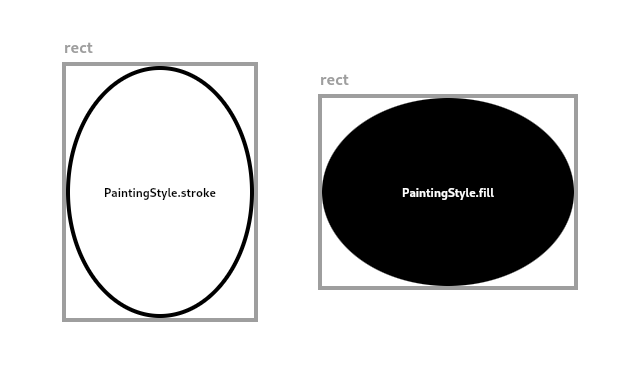

| /// Strategies for painting shapes and paths on a canvas. |

| /// |

| /// See [Paint.style]. |

| // These enum values must be kept in sync with DlDrawStyle. |

| enum PaintingStyle { |

| // This list comes from dl_paint.h and the values (order) should be kept |

| // in sync. |

| |

| /// Apply the [Paint] to the inside of the shape. For example, when |

| /// applied to the [Canvas.drawCircle] call, this results in a disc |

| /// of the given size being painted. |

| fill, |

| |

| /// Apply the [Paint] to the edge of the shape. For example, when |

| /// applied to the [Canvas.drawCircle] call, this results in a hoop |

| /// of the given size being painted. The line drawn on the edge will |

| /// be the width given by the [Paint.strokeWidth] property. |

| stroke, |

| } |

| |

| /// Different ways to clip a widget's content. |

| enum Clip { |

| /// No clip at all. |

| /// |

| /// This is the default option for most widgets: if the content does not |

| /// overflow the widget boundary, don't pay any performance cost for clipping. |

| /// |

| /// If the content does overflow, explicitly specify one of the following |

| /// [Clip] options: |

| /// * [hardEdge], which is the fastest clipping, but with lower fidelity. |

| /// * [antiAlias], which is a little slower than [hardEdge], but with smoothed edges. |

| /// * [antiAliasWithSaveLayer], which is much slower than [antiAlias], and should |

| /// rarely be used. |

| none, |

| |

| /// Clip, but do not apply anti-aliasing. |

| /// |

| /// This mode enables clipping, but curves and non-axis-aligned straight lines will be |

| /// jagged as no effort is made to anti-alias. |

| /// |

| /// Faster than other clipping modes, but slower than [none]. |

| /// |

| /// This is a reasonable choice when clipping is needed, if the container is an |

| /// axis-aligned rectangle or an axis-aligned rounded rectangle with very small corner radii. |

| /// |

| /// See also: |

| /// |

| /// * [antiAlias], which is more reasonable when clipping is needed and the shape is not |

| /// an axis-aligned rectangle. |

| hardEdge, |

| |

| /// Clip with anti-aliasing. |

| /// |

| /// This mode has anti-aliased clipping edges to achieve a smoother look. |

| /// |

| /// It's much faster than [antiAliasWithSaveLayer], but slower than [hardEdge]. |

| /// |

| /// This will be the common case when dealing with circles and arcs. |

| /// |

| /// Unlike [hardEdge] and [antiAliasWithSaveLayer], this clipping may have |

| /// bleeding edge artifacts. |

| /// (See https://fiddle.skia.org/c/21cb4c2b2515996b537f36e7819288ae for an example.) |

| /// |

| /// See also: |

| /// |

| /// * [hardEdge], which is a little faster, but with lower fidelity. |

| /// * [antiAliasWithSaveLayer], which is much slower, but can avoid the |

| /// bleeding edges if there's no other way. |

| /// * [Paint.isAntiAlias], which is the anti-aliasing switch for general draw operations. |

| antiAlias, |

| |

| /// Clip with anti-aliasing and saveLayer immediately following the clip. |

| /// |

| /// This mode not only clips with anti-aliasing, but also allocates an offscreen |

| /// buffer. All subsequent paints are carried out on that buffer before finally |

| /// being clipped and composited back. |

| /// |

| /// This is very slow. It has no bleeding edge artifacts (that [antiAlias] has) |

| /// but it changes the semantics as an offscreen buffer is now introduced. |

| /// (See https://github.com/flutter/flutter/issues/18057#issuecomment-394197336 |

| /// for a difference between paint without saveLayer and paint with saveLayer.) |

| /// |

| /// This is only rarely needed. One case where you might need this is if |

| /// you have an image overlaid on a very different background color. In these |

| /// cases, consider whether you can avoid overlaying multiple colors in one |

| /// spot (e.g. by having the background color only present where the image is |

| /// absent). If you can, [antiAlias] would be fine and much faster. |

| /// |

| /// See also: |

| /// |

| /// * [antiAlias], which is much faster, and has similar clipping results. |

| antiAliasWithSaveLayer, |

| } |

| |

| /// A description of the style to use when drawing on a [Canvas]. |

| /// |

| /// Most APIs on [Canvas] take a [Paint] object to describe the style |

| /// to use for that operation. |
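
| /// |

| /// For example, a minimal sketch (the function name `paintStrokedCircle` is |

| /// hypothetical) that configures a [Paint] with the cascade operator and |

| /// passes it to [Canvas.drawCircle]: |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// void paintStrokedCircle(Canvas canvas) { |

| ///   // Properties that are not set keep their documented defaults. |

| ///   final Paint paint = Paint() |

| ///     ..style = PaintingStyle.stroke |

| ///     ..strokeWidth = 4.0 |

| ///     ..strokeCap = StrokeCap.round |

| ///     ..color = const Color(0xFF2196F3); |

| ///   canvas.drawCircle(const Offset(50.0, 50.0), 40.0, paint); |

| /// } |

| /// ``` |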

| final class Paint { |

| /// Constructs an empty [Paint] object with all fields initialized to |

| /// their defaults. |

| Paint(); |

| |

| // Paint objects are encoded in two buffers: |

| // |

| // * _data is binary data in four-byte fields, each of which is either a |

| // uint32_t or a float. The default value for each field is encoded as |

| // zero to make initialization trivial. Most values already have a default |

| // value of zero, but some, such as color, have a non-zero default value. |

| // To encode or decode these values, XOR the value with the default value. |

| // |

| // * _objects is a list of unencodable objects, typically wrappers for native |

| // objects. The objects are simply stored in the list without any additional |

| // encoding. |

| // |

| // The binary format must match the deserialization code in paint.cc. |
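
| // |

| // As a rough illustration of the zero-default encoding described above, the |

| // following standalone sketch mirrors the color field. The names |

| // `kColorDefault`, `encode` and `decode` are hypothetical, and the unsigned |

| // accessors are an assumption made to keep the arithmetic simple; this is |

| // not the engine's actual code: |

| // |

| // ```dart |

| // import 'dart:typed_data'; |

| // |

| // // Hypothetical stand-in for a non-zero default (opaque black). |

| // const int kColorDefault = 0xFF000000; |

| // |

| // // XOR is its own inverse, so the same operation encodes and decodes. |

| // int encode(int value) => value ^ kColorDefault; |

| // int decode(int encoded) => encoded ^ kColorDefault; |

| // |

| // void main() { |

| //   final ByteData data = ByteData(4); // All bytes start as zero. |

| //   // A zeroed field decodes to the default value. |

| //   assert(decode(data.getUint32(0)) == kColorDefault); |

| //   // A non-default value survives a round trip through the buffer. |

| //   data.setUint32(0, encode(0xFF2196F3)); |

| //   assert(decode(data.getUint32(0)) == 0xFF2196F3); |

| // } |

| // ``` |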

| |

| // C++ unit tests access this. |

| @pragma('vm:entry-point') |

| final ByteData _data = ByteData(_kDataByteCount); |

| |

| static const int _kIsAntiAliasIndex = 0; |

| static const int _kColorIndex = 1; |

| static const int _kBlendModeIndex = 2; |

| static const int _kStyleIndex = 3; |

| static const int _kStrokeWidthIndex = 4; |

| static const int _kStrokeCapIndex = 5; |

| static const int _kStrokeJoinIndex = 6; |

| static const int _kStrokeMiterLimitIndex = 7; |

| static const int _kFilterQualityIndex = 8; |

| static const int _kMaskFilterIndex = 9; |

| static const int _kMaskFilterBlurStyleIndex = 10; |

| static const int _kMaskFilterSigmaIndex = 11; |

| static const int _kInvertColorIndex = 12; |

| |

| static const int _kIsAntiAliasOffset = _kIsAntiAliasIndex << 2; |

| static const int _kColorOffset = _kColorIndex << 2; |

| static const int _kBlendModeOffset = _kBlendModeIndex << 2; |

| static const int _kStyleOffset = _kStyleIndex << 2; |

| static const int _kStrokeWidthOffset = _kStrokeWidthIndex << 2; |

| static const int _kStrokeCapOffset = _kStrokeCapIndex << 2; |

| static const int _kStrokeJoinOffset = _kStrokeJoinIndex << 2; |

| static const int _kStrokeMiterLimitOffset = _kStrokeMiterLimitIndex << 2; |

| static const int _kFilterQualityOffset = _kFilterQualityIndex << 2; |

| static const int _kMaskFilterOffset = _kMaskFilterIndex << 2; |

| static const int _kMaskFilterBlurStyleOffset = _kMaskFilterBlurStyleIndex << 2; |

| static const int _kMaskFilterSigmaOffset = _kMaskFilterSigmaIndex << 2; |

| static const int _kInvertColorOffset = _kInvertColorIndex << 2; |

| |

| // If you add more fields, remember to update _kDataByteCount. |

| static const int _kDataByteCount = 52; // 4 * (last index + 1). |

| |

| // Binary format must match the deserialization code in paint.cc. |

| // C++ unit tests access this. |

| @pragma('vm:entry-point') |

| List<Object?>? _objects; |

| |

| List<Object?> _ensureObjectsInitialized() { |

| return _objects ??= List<Object?>.filled(_kObjectCount, null); |

| } |

| |

| static const int _kShaderIndex = 0; |

| static const int _kColorFilterIndex = 1; |

| static const int _kImageFilterIndex = 2; |

| static const int _kObjectCount = 3; // Must be one larger than the largest index. |

| |

| /// Whether to apply anti-aliasing to lines and images drawn on the |

| /// canvas. |

| /// |

| /// Defaults to true. |

| bool get isAntiAlias { |

| return _data.getInt32(_kIsAntiAliasOffset, _kFakeHostEndian) == 0; |

| } |

| set isAntiAlias(bool value) { |

| // We encode true as zero and false as one because the default value, which |

| // we always encode as zero, is true. |

| final int encoded = value ? 0 : 1; |

| _data.setInt32(_kIsAntiAliasOffset, encoded, _kFakeHostEndian); |

| } |

| |

| // Must be kept in sync with the default in paint.cc. |

| static const int _kColorDefault = 0xFF000000; |

| |

| /// The color to use when stroking or filling a shape. |

| /// |

| /// Defaults to opaque black. |

| /// |

| /// See also: |

| /// |

| /// * [style], which controls whether to stroke or fill. |

| /// * [colorFilter], which overrides [color]. |

| /// * [shader], which overrides [color] with more elaborate effects. |

| /// |

| /// This color is not used when compositing. To colorize a layer, use |

| /// [colorFilter]. |

| Color get color { |

| final int encoded = _data.getInt32(_kColorOffset, _kFakeHostEndian); |

| return Color(encoded ^ _kColorDefault); |

| } |

| set color(Color value) { |

| final int encoded = value.value ^ _kColorDefault; |

| _data.setInt32(_kColorOffset, encoded, _kFakeHostEndian); |

| } |

| |

| // Must be kept in sync with the default in paint.cc. |

| static final int _kBlendModeDefault = BlendMode.srcOver.index; |

| |

| /// A blend mode to apply when a shape is drawn or a layer is composited. |

| /// |

| /// The source colors are from the shape being drawn (e.g. from |

| /// [Canvas.drawPath]) or layer being composited (the graphics that were drawn |

| /// between the [Canvas.saveLayer] and [Canvas.restore] calls), after applying |

| /// the [colorFilter], if any. |

| /// |

| /// The destination colors are from the background onto which the shape or |

| /// layer is being composited. |

| /// |

| /// Defaults to [BlendMode.srcOver]. |
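
| /// |

| /// A minimal sketch of the layer case, assuming a hypothetical helper named |

| /// `paintMultipliedLayer`: the [Paint] passed to [Canvas.saveLayer] carries |

| /// the blend mode that is applied when [Canvas.restore] composites the layer: |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// void paintMultipliedLayer(Canvas canvas, Rect bounds) { |

| ///   // Everything drawn until restore() becomes the source image. |

| ///   canvas.saveLayer(bounds, Paint()..blendMode = BlendMode.multiply); |

| ///   canvas.drawRect(bounds, Paint()..color = const Color(0xFFFFC107)); |

| ///   // The layer is blended onto the destination here. |

| ///   canvas.restore(); |

| /// } |

| /// ``` |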

| /// |

| /// See also: |

| /// |

| /// * [Canvas.saveLayer], which uses its [Paint]'s [blendMode] to composite |

| /// the layer when [Canvas.restore] is called. |

| /// * [BlendMode], which discusses the use of [Canvas.saveLayer] with |

| /// [blendMode]. |

| BlendMode get blendMode { |

| final int encoded = _data.getInt32(_kBlendModeOffset, _kFakeHostEndian); |

| return BlendMode.values[encoded ^ _kBlendModeDefault]; |

| } |

| set blendMode(BlendMode value) { |

| final int encoded = value.index ^ _kBlendModeDefault; |

| _data.setInt32(_kBlendModeOffset, encoded, _kFakeHostEndian); |

| } |

| |

| /// Whether to paint inside shapes or the edges of shapes. |

| /// |

| /// Defaults to [PaintingStyle.fill]. |

| PaintingStyle get style { |

| return PaintingStyle.values[_data.getInt32(_kStyleOffset, _kFakeHostEndian)]; |

| } |

| set style(PaintingStyle value) { |

| final int encoded = value.index; |

| _data.setInt32(_kStyleOffset, encoded, _kFakeHostEndian); |

| } |

| |

| /// How wide to make edges drawn when [style] is set to |

| /// [PaintingStyle.stroke]. The width is given in logical pixels measured in |

| /// the direction orthogonal to the direction of the path. |

| /// |

| /// Defaults to 0.0, which corresponds to a hairline width. |

| double get strokeWidth { |

| return _data.getFloat32(_kStrokeWidthOffset, _kFakeHostEndian); |

| } |

| set strokeWidth(double value) { |

| final double encoded = value; |

| _data.setFloat32(_kStrokeWidthOffset, encoded, _kFakeHostEndian); |

| } |

| |

| /// The kind of finish to place on the end of lines drawn when |

| /// [style] is set to [PaintingStyle.stroke]. |

| /// |

| /// Defaults to [StrokeCap.butt], i.e. no caps. |

| StrokeCap get strokeCap { |

| return StrokeCap.values[_data.getInt32(_kStrokeCapOffset, _kFakeHostEndian)]; |

| } |

| set strokeCap(StrokeCap value) { |

| final int encoded = value.index; |

| _data.setInt32(_kStrokeCapOffset, encoded, _kFakeHostEndian); |

| } |

| |

| /// The kind of finish to place on the joins between segments. |

| /// |

| /// This applies to paths drawn when [style] is set to [PaintingStyle.stroke]. |

| /// It does not apply to points drawn as lines with [Canvas.drawPoints]. |

| /// |

| /// Defaults to [StrokeJoin.miter], i.e. sharp corners. |

| /// |

| /// Some examples of joins: |

| /// |

| /// {@animation 300 300 https://flutter.github.io/assets-for-api-docs/assets/dart-ui/miter_4_join.mp4} |

| /// |

| /// {@animation 300 300 https://flutter.github.io/assets-for-api-docs/assets/dart-ui/round_join.mp4} |

| /// |

| /// {@animation 300 300 https://flutter.github.io/assets-for-api-docs/assets/dart-ui/bevel_join.mp4} |

| /// |

| /// The centers of the line segments are colored in the diagrams above to |

| /// highlight the joins, but in normal usage the join is the same color as the |

| /// line. |

| /// |

| /// See also: |

| /// |

| /// * [strokeMiterLimit] to control when miters are replaced by bevels when |

| /// this is set to [StrokeJoin.miter]. |

| /// * [strokeCap] to control what is drawn at the ends of the stroke. |

| /// * [StrokeJoin] for the definitive list of stroke joins. |

| StrokeJoin get strokeJoin { |

| return StrokeJoin.values[_data.getInt32(_kStrokeJoinOffset, _kFakeHostEndian)]; |

| } |

| set strokeJoin(StrokeJoin value) { |

| final int encoded = value.index; |

| _data.setInt32(_kStrokeJoinOffset, encoded, _kFakeHostEndian); |

| } |

| |

| // Must be kept in sync with the default in paint.cc. |

| static const double _kStrokeMiterLimitDefault = 4.0; |

| |

| /// The limit for miters to be drawn on segments when the join is set to |

| /// [StrokeJoin.miter] and the [style] is set to [PaintingStyle.stroke]. If |

| /// this limit is exceeded, then a [StrokeJoin.bevel] join will be drawn |

| /// instead. This may cause some 'popping' of the corners of a path if the |

| /// angle between line segments is animated, as seen in the diagrams below. |

| /// |

| /// This limit is expressed as a limit on the length of the miter. |

| /// |

| /// Defaults to 4.0. Using zero as a limit will cause a [StrokeJoin.bevel] |

| /// join to be used all the time. |

| /// |

| /// {@animation 300 300 https://flutter.github.io/assets-for-api-docs/assets/dart-ui/miter_0_join.mp4} |

| /// |

| /// {@animation 300 300 https://flutter.github.io/assets-for-api-docs/assets/dart-ui/miter_4_join.mp4} |

| /// |

| /// {@animation 300 300 https://flutter.github.io/assets-for-api-docs/assets/dart-ui/miter_6_join.mp4} |

| /// |

| /// The centers of the line segments are colored in the diagrams above to |

| /// highlight the joins, but in normal usage the join is the same color as the |

| /// line. |

| /// |

| /// See also: |

| /// |

| /// * [strokeJoin] to control the kind of finish to place on the joins |

| /// between segments. |

| /// * [strokeCap] to control what is drawn at the ends of the stroke. |

| double get strokeMiterLimit { |

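| // The setter stores the offset from the default, so add the default back. |

| return _data.getFloat32(_kStrokeMiterLimitOffset, _kFakeHostEndian) + _kStrokeMiterLimitDefault; |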

| } |

| set strokeMiterLimit(double value) { |

| final double encoded = value - _kStrokeMiterLimitDefault; |

| _data.setFloat32(_kStrokeMiterLimitOffset, encoded, _kFakeHostEndian); |

| } |

| |

| /// A mask filter (for example, a blur) to apply to a shape after it has been |

| /// drawn but before it has been composited into the image. |

| /// |

| /// See [MaskFilter] for details. |
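
| /// |

| /// A minimal sketch, assuming a hypothetical helper named `paintSoftShadow`, |

| /// that softens the edges of a rectangle with [MaskFilter.blur]: |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// void paintSoftShadow(Canvas canvas) { |

| ///   final Paint paint = Paint() |

| ///     ..color = const Color(0x66000000) |

| ///     // The sigma of 4.0 is an arbitrary illustrative value. |

| ///     ..maskFilter = const MaskFilter.blur(BlurStyle.normal, 4.0); |

| ///   canvas.drawRect(const Rect.fromLTWH(10.0, 10.0, 100.0, 60.0), paint); |

| /// } |

| /// ``` |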

| MaskFilter? get maskFilter { |

| switch (_data.getInt32(_kMaskFilterOffset, _kFakeHostEndian)) { |

| case MaskFilter._TypeNone: |

| return null; |

| case MaskFilter._TypeBlur: |

| return MaskFilter.blur( |

| BlurStyle.values[_data.getInt32(_kMaskFilterBlurStyleOffset, _kFakeHostEndian)], |

| _data.getFloat32(_kMaskFilterSigmaOffset, _kFakeHostEndian), |

| ); |

| } |

| return null; |

| } |

| set maskFilter(MaskFilter? value) { |

| if (value == null) { |

| _data.setInt32(_kMaskFilterOffset, MaskFilter._TypeNone, _kFakeHostEndian); |

| _data.setInt32(_kMaskFilterBlurStyleOffset, 0, _kFakeHostEndian); |

| _data.setFloat32(_kMaskFilterSigmaOffset, 0.0, _kFakeHostEndian); |

| } else { |

| // For now we only support one kind of MaskFilter, so we don't need to |

| // check what the type is if it's not null. |

| _data.setInt32(_kMaskFilterOffset, MaskFilter._TypeBlur, _kFakeHostEndian); |

| _data.setInt32(_kMaskFilterBlurStyleOffset, value._style.index, _kFakeHostEndian); |

| _data.setFloat32(_kMaskFilterSigmaOffset, value._sigma, _kFakeHostEndian); |

| } |

| } |

| |

| /// Controls the performance vs quality trade-off to use when sampling bitmaps, |

| /// as with an [ImageShader], or when drawing images, as with [Canvas.drawImage], |

| /// [Canvas.drawImageRect], [Canvas.drawImageNine] or [Canvas.drawAtlas]. |

| /// |

| /// Defaults to [FilterQuality.none]. |

| // TODO(ianh): verify that the image drawing methods actually respect this |

| FilterQuality get filterQuality { |

| return FilterQuality.values[_data.getInt32(_kFilterQualityOffset, _kFakeHostEndian)]; |

| } |

| set filterQuality(FilterQuality value) { |

| final int encoded = value.index; |

| _data.setInt32(_kFilterQualityOffset, encoded, _kFakeHostEndian); |

| } |

| |

| /// The shader to use when stroking or filling a shape. |

| /// |

| /// When this is null, the [color] is used instead. |
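
| /// |

| /// A minimal sketch, assuming a hypothetical helper named `paintGradient`, |

| /// that fills a rectangle with a two-color [Gradient.linear] shader: |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// void paintGradient(Canvas canvas, Rect rect) { |

| ///   final Paint paint = Paint() |

| ///     // The gradient runs from the top left to the bottom right corner. |

| ///     ..shader = Gradient.linear( |

| ///       rect.topLeft, |

| ///       rect.bottomRight, |

| ///       <Color>[const Color(0xFF2196F3), const Color(0xFF4CAF50)], |

| ///     ); |

| ///   canvas.drawRect(rect, paint); |

| /// } |

| /// ``` |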

| /// |

| /// See also: |

| /// |

| /// * [Gradient], a shader that paints a color gradient. |

| /// * [ImageShader], a shader that tiles an [Image]. |

| /// * [colorFilter], which overrides [shader]. |

| /// * [color], which is used if [shader] and [colorFilter] are null. |

| Shader? get shader { |

| return _objects?[_kShaderIndex] as Shader?; |

| } |

| set shader(Shader? value) { |

| assert(() { |

| assert( |

| value == null || !value.debugDisposed, |

| 'Attempted to set a disposed shader to $this', |

| ); |

| return true; |

| }()); |

| assert(() { |

| if (value is FragmentShader) { |

| if (!value._validateSamplers()) { |

| throw Exception('Invalid FragmentShader ${value._debugName ?? ''}: missing sampler'); |

| } |

| } |

| return true; |

| }()); |

| _ensureObjectsInitialized()[_kShaderIndex] = value; |

| } |

| |

| /// A color filter to apply when a shape is drawn or when a layer is |

| /// composited. |

| /// |

| /// See [ColorFilter] for details. |

| /// |

| /// When a shape is being drawn, [colorFilter] overrides [color] and [shader]. |
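
| /// |

| /// A minimal sketch, assuming a hypothetical helper named `paintTinted`, |

| /// that tints an image with a single color using [ColorFilter.mode]: |

| /// |

| /// ```dart |

| /// import 'dart:ui'; |

| /// |

| /// void paintTinted(Canvas canvas, Image image) { |

| ///   final Paint paint = Paint() |

| ///     // srcIn keeps the image's alpha and replaces its colors. |

| ///     ..colorFilter = const ColorFilter.mode( |

| ///       Color(0xFFFF5722), |

| ///       BlendMode.srcIn, |

| ///     ); |

| ///   canvas.drawImage(image, Offset.zero, paint); |

| /// } |

| /// ``` |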

| ColorFilter? get colorFilter { |

| final _ColorFilter? nativeFilter = _objects?[_kColorFilterIndex] as _ColorFilter?; |

| return nativeFilter?.creator; |

| } |

| set colorFilter(ColorFilter? value) { |

| final _ColorFilter? nativeFilter = value?._toNativeColorFilter(); |

| if (nativeFilter == null) { |

| if (_objects != null) { |

| _objects![_kColorFilterIndex] = null; |

| } |

| } else { |

| _ensureObjectsInitialized()[_kColorFilterIndex] = nativeFilter; |

| } |

| } |

| |

| /// The [ImageFilter] to use when drawing raster images. |

| /// |

| /// For example, to blur an image using [Canvas.drawImage], apply an |

| /// [ImageFilter.blur]: |

| /// |

| /// ```dart |

| /// void paint(Canvas canvas, Size size) { |

| /// canvas.drawImage( |

| /// _image, |

| /// ui.Offset.zero, |

| /// Paint()..imageFilter = ui.ImageFilter.blur(sigmaX: 0.5, sigmaY: 0.5), |

| /// ); |

| /// } |

| /// ``` |

| /// |

| /// See also: |

| /// |

| /// * [MaskFilter], which is used for drawing geometry. |

| ImageFilter? get imageFilter { |

| final _ImageFilter? nativeFilter = _objects?[_kImageFilterIndex] as _ImageFilter?; |

| return nativeFilter?.creator; |

| } |

| set imageFilter(ImageFilter? value) { |

| if (value == null) { |

| if (_objects != null) { |

| _objects![_kImageFilterIndex] = null; |

| } |

| } else { |

| final List<Object?> objects = _ensureObjectsInitialized(); |

| final _ImageFilter? imageFilter = objects[_kImageFilterIndex] as _ImageFilter?; |

| if (imageFilter?.creator != value) { |

| objects[_kImageFilterIndex] = value._toNativeImageFilter(); |

| } |

| } |

| } |

| |

| /// Whether the colors of the image are inverted when drawn. |

| /// |

| /// Inverting the colors of an image applies a new color filter that will |

| /// be composed with any user provided color filters. This is primarily |

| /// used for implementing smart invert on iOS. |

| bool get invertColors { |

| return _data.getInt32(_kInvertColorOffset, _kFakeHostEndian) == 1; |

| } |

| set invertColors(bool value) { |

| _data.setInt32(_kInvertColorOffset, value ? 1 : 0, _kFakeHostEndian); |

| } |

| |

| @override |

| String toString() { |

| if (const bool.fromEnvironment('dart.vm.product')) { |

| return super.toString(); |

| } |

| final StringBuffer result = StringBuffer(); |

| String semicolon = ''; |

| result.write('Paint('); |

| if (style == PaintingStyle.stroke) { |

| result.write('$style'); |

| if (strokeWidth != 0.0) { |

| result.write(' ${strokeWidth.toStringAsFixed(1)}'); |

| } else { |

| result.write(' hairline'); |

| } |

| if (strokeCap != StrokeCap.butt) { |

| result.write(' $strokeCap'); |

| } |

| if (strokeJoin == StrokeJoin.miter) { |

| if (strokeMiterLimit != _kStrokeMiterLimitDefault) { |

| result.write(' $strokeJoin up to ${strokeMiterLimit.toStringAsFixed(1)}'); |

| } |

| } else { |

| result.write(' $strokeJoin'); |

| } |

| semicolon = '; '; |

| } |

| if (!isAntiAlias) { |

| result.write('${semicolon}antialias off'); |

| semicolon = '; '; |

| } |

| if (color != const Color(_kColorDefault)) { |

| result.write('$semicolon$color'); |

| semicolon = '; '; |

| } |

| if (blendMode.index != _kBlendModeDefault) { |

| result.write('$semicolon$blendMode'); |

| semicolon = '; '; |

| } |

| if (colorFilter != null) { |

| result.write('${semicolon}colorFilter: $colorFilter'); |

| semicolon = '; '; |

| } |

| if (maskFilter != null) { |

| result.write('${semicolon}maskFilter: $maskFilter'); |

| semicolon = '; '; |

| } |

| if (filterQuality != FilterQuality.none) { |

| result.write('${semicolon}filterQuality: $filterQuality'); |

| semicolon = '; '; |

| } |

| if (shader != null) { |

| result.write('${semicolon}shader: $shader'); |

| semicolon = '; '; |

| } |

| if (imageFilter != null) { |

| result.write('${semicolon}imageFilter: $imageFilter'); |

| semicolon = '; '; |

| } |

| if (invertColors) { |

| result.write('${semicolon}invert: $invertColors'); |

| } |

| result.write(')'); |

| return result.toString(); |

| } |

| } |

| |

| /// The color space describes the colors that are available to an [Image]. |

| /// |

| /// This value can help decide which [ImageByteFormat] to use with |

| /// [Image.toByteData]. Images that are in the [extendedSRGB] color space |

| /// should use something like [ImageByteFormat.rawExtendedRgba128] so that |

| /// colors outside of the sRGB gamut aren't lost. |

| /// |

| /// This is also the result of [Image.colorSpace]. |
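
| /// |

| /// A minimal sketch of that decision, assuming a hypothetical helper named |

| /// `readPixels` and assuming [ImageByteFormat.rawRgba] is acceptable for |

| /// sRGB images: |

| /// |

| /// ```dart |

| /// import 'dart:typed_data'; |

| /// import 'dart:ui'; |

| /// |

| /// Future<ByteData?> readPixels(Image image) { |

| ///   // Keep out-of-gamut values only when the image can contain them. |

| ///   final ImageByteFormat format = |

| ///       image.colorSpace == ColorSpace.extendedSRGB |

| ///           ? ImageByteFormat.rawExtendedRgba128 |

| ///           : ImageByteFormat.rawRgba; |

| ///   return image.toByteData(format: format); |

| /// } |

| /// ``` |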

| /// |

| /// See also: https://en.wikipedia.org/wiki/Color_space |

| enum ColorSpace { |

| /// The sRGB color space. |

| /// |

| /// You may know this as the standard color space for the web or the color |

| /// space of non-wide-gamut Flutter apps. |

| /// |

| /// See also: https://en.wikipedia.org/wiki/SRGB |

| sRGB, |

| /// A color space that is backwards compatible with sRGB but can represent |

| /// colors outside of that gamut with values outside of [0..1]. In order to |

| /// see the extended values an [ImageByteFormat] like |

| /// [ImageByteFormat.rawExtendedRgba128] must be used. |

| extendedSRGB, |

| } |

| |

| /// The format in which image bytes should be returned when using |

| /// [Image.toByteData]. |

| // We do not expect to add more encoding formats to the ImageByteFormat enum, |

| // considering the binary size of the engine after LTO. To encode other |

| // formats, use a third-party pure-Dart image library. |

| // See https://github.com/flutter/flutter/issues/16635 for more details. |

| enum ImageByteFormat { |

| /// Raw RGBA format. |

| /// |

| /// Unencoded bytes, in RGBA row-major form with premultiplied alpha, 8 bits per channel. |

| rawRgba, |

| |

| /// Raw straight RGBA format. |

| /// |

| /// Unencoded bytes, in RGBA row-major form with straight alpha, 8 bits per channel. |
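
| /// |

| /// As a rough sketch of how this differs from [rawRgba], the hypothetical |

| /// helper below (`unpremultiplyInPlace` is not part of `dart:ui`) recovers |

| /// approximate straight-alpha values from premultiplied 8-bit RGBA bytes. |

| /// It assumes each stored color channel is the straight value scaled by |

| /// `alpha / 255`; rounding means the original values cannot always be |

| /// recovered exactly. |

| /// |

| /// ```dart |

| /// import 'dart:typed_data'; |

| /// |

| /// // Hypothetical helper, not part of dart:ui: converts premultiplied RGBA |

| /// // bytes (4 bytes per pixel) to straight RGBA bytes in place. |

| /// void unpremultiplyInPlace(Uint8List rgba) { |

| /// for (int i = 0; i + 3 < rgba.length; i += 4) { |

| /// final int alpha = rgba[i + 3]; |

| /// // Fully transparent pixels carry no recoverable color, and fully |

| /// // opaque pixels are identical in both forms. |

| /// if (alpha == 0 || alpha == 255) { |

| /// continue; |

| /// } |

| /// for (int c = 0; c < 3; c++) { |

| /// // Divide by alpha / 255 with rounding, then clamp to 8 bits. |

| /// final int value = (rgba[i + c] * 255 + alpha ~/ 2) ~/ alpha; |

| /// rgba[i + c] = value > 255 ? 255 : value; |

| /// } |

| /// } |

| /// } |

| /// ``` |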

| rawStraightRgba, |

| |

| /// Raw unmodified format. |

| /// |

| /// Unencoded bytes, in the image's existing format. For example, a grayscale |

| /// image may use a single 8-bit channel for each pixel. |

| rawUnmodified, |

| |

| /// Raw extended range RGBA format. |

| /// |

| /// Unencoded bytes, in RGBA row-major form with straight alpha, 32-bit |

| /// float (IEEE 754 binary32) per channel. |

| /// |

| /// Example usage: |

| /// |

| /// ```dart |

| /// import 'dart:ui' as ui; |

| /// import 'dart:typed_data'; |

| /// |

| /// Future<Map<String, double>> getFirstPixel(ui.Image image) async { |

| /// final ByteData data = |

| /// (await image.toByteData(format: ui.ImageByteFormat.rawExtendedRgba128))!; |

| /// final Float32List floats = Float32List.view(data.buffer); |

| /// return <String, double>{ |

| /// 'r': floats[0], |

| /// 'g': floats[1], |

| /// 'b': floats[2], |

| /// 'a': floats[3], |

| /// }; |

| /// } |

| /// ``` |

| rawExtendedRgba128, |

| |

| /// PNG format. |

| /// |

| /// A lossless compression format for images. This format is well suited for |

| /// images with hard edges, such as screenshots or sprites, and images with |

| /// text. Transparency is supported. The PNG format supports images up to |

| /// 2,147,483,647 pixels in either dimension, though in practice available |

| /// memory provides a more immediate limitation on maximum image size. |

| /// |

| /// PNG images normally use the `.png` file extension and the `image/png` MIME |

| /// type. |

| /// |

| /// See also: |

| /// |

| /// * <https://en.wikipedia.org/wiki/Portable_Network_Graphics>, the Wikipedia page on PNG. |

| /// * <https://tools.ietf.org/rfc/rfc2083.txt>, the PNG standard. |

| png, |

| } |

| |

| /// The format of pixel data given to [decodeImageFromPixels]. |

| enum PixelFormat { |

| /// Each pixel is 32 bits, with the highest 8 bits encoding red, the next 8 |

| /// bits encoding green, the next 8 bits encoding blue, and the lowest 8 bits |

| /// encoding alpha. Premultiplied alpha is used. |

| rgba8888, |

| |

| /// Each pixel is 32 bits, with the highest 8 bits encoding blue, the next 8 |

| /// bits encoding green, the next 8 bits encoding red, and the lowest 8 bits |

| /// encoding alpha. Premultiplied alpha is used. |

| bgra8888, |

| |

| /// Each pixel is 128 bits, where each color component is a 32-bit float that |

| /// is normalized across the sRGB gamut. The first float is the red |

| /// component, followed by green, blue, and alpha. Premultiplied alpha isn't |

| /// used, matching [ImageByteFormat.rawExtendedRgba128]. |
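
| /// |

| /// As an illustration of this layout, the hypothetical helper below |

| /// (`solidColorRgbaFloat32` is not part of `dart:ui`) fills a buffer with a |

| /// single straight-alpha color, using four 32-bit floats per pixel in red, |

| /// green, blue, alpha order. It is a minimal sketch that assumes the floats |

| /// are stored in host byte order, as [Float32List] does. |

| /// |

| /// ```dart |

| /// import 'dart:typed_data'; |

| /// |

| /// // Hypothetical helper, not part of dart:ui: builds width * height pixels |

| /// // of one color, 16 bytes (four 32-bit floats) per pixel. |

| /// Uint8List solidColorRgbaFloat32( |

| /// int width, int height, double r, double g, double b, double a) { |

| /// final Float32List floats = Float32List(width * height * 4); |

| /// for (int i = 0; i < floats.length; i += 4) { |

| /// floats[i] = r; |

| /// floats[i + 1] = g; |

| /// floats[i + 2] = b; |

| /// floats[i + 3] = a; // Straight alpha: color channels are not scaled. |

| /// } |

| /// // View the same memory as bytes; no copy is made. |

| /// return floats.buffer.asUint8List(); |

| /// } |

| /// ``` |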

| rgbaFloat32, |

| } |

| |

| /// Signature for [Image] lifecycle events. |

| typedef ImageEventCallback = void Function(Image image); |

| |

| /// Opaque handle to raw decoded image data (pixels). |

| /// |

| /// To obtain an [Image] object, use the [ImageDescriptor] API. |

| /// |

| /// To draw an [Image], use one of the methods on the [Canvas] class, such as |

| /// [Canvas.drawImage]. |

| /// |

| /// A class or method that receives an image object must call [dispose] on the |

| /// handle when it is no longer needed. To create a shareable reference to the |

| /// underlying image, call [clone]. The method or object that receives |

| /// the new instance will then be responsible for disposing it, and the |

| /// underlying image itself will be disposed when all outstanding handles are |

| /// disposed. |

| /// |

| /// If `dart:ui` passes an `Image` object and the recipient wishes to share |

| /// that handle with other callers, [clone] must be called _before_ [dispose]. |

| /// A handle that has been disposed cannot create new handles anymore. |

| /// |

| /// See also: |

| /// |

| /// * [Image](https://api.flutter.dev/flutter/widgets/Image-class.html), the class in the [widgets] library. |

| /// * [ImageDescriptor], which allows reading information about the image and |

| /// creating a codec to decode it. |

| /// * [instantiateImageCodec], a utility method that wraps [ImageDescriptor]. |

| class Image { |

| Image._(this._image, this.width, this.height) { |

| assert(() { |

| _debugStack = StackTrace.current; |

| return true; |

| }()); |

| _image._handles.add(this); |

| onCreate?.call(this); |

| } |

| |

| // C++ unit tests access this. |

| @pragma('vm:entry-point') |

| final _Image _image; |

| |

| /// A callback that is invoked to report an image creation. |

| /// |

| /// Prefer [MemoryAllocations] in flutter/foundation.dart over using |

| /// [onCreate] directly, because [MemoryAllocations] allows multiple |

| /// callbacks. |

| static ImageEventCallback? onCreate; |

| |

| /// A callback that is invoked to report an image disposal. |

| /// |

| /// Prefer [MemoryAllocations] in flutter/foundation.dart over using |

| /// [onDispose] directly, because [MemoryAllocations] allows multiple |

| /// callbacks. |

| static ImageEventCallback? onDispose; |

| |

| StackTrace? _debugStack; |

| |

| /// The number of image pixels along the image's horizontal axis. |

| final int width; |

| |

| /// The number of image pixels along the image's vertical axis. |

| final int height; |

| |

| bool _disposed = false; |

| |

| /// Release this handle's claim on the underlying Image. This handle is no |

| /// longer usable after this method is called. |

| /// |

| /// Once all outstanding handles have been disposed, the underlying image will |

| /// be disposed as well. |

| /// |

| /// In debug mode, [debugGetOpenHandleStackTraces] will return a list of |

| /// [StackTrace] objects from all open handles' creation points. This is |

| /// useful when trying to determine what parts of the program are keeping an |

| /// image resident in memory. |

| void dispose() { |

| onDispose?.call(this); |

| assert(!_disposed && !_image._disposed); |

| assert(_image._handles.contains(this)); |

| _disposed = true; |

| final bool removed = _image._handles.remove(this); |

| assert(removed); |

| if (_image._handles.isEmpty) { |

| _image.dispose(); |

| } |

| } |

| |

| /// Whether this reference to the underlying image is [dispose]d. |

| /// |

| /// This only returns a valid value if asserts are enabled, and must not be |

| /// used otherwise. |

| bool get debugDisposed { |

| bool? disposed; |

| assert(() { |

| disposed = _disposed; |

| return true; |

| }()); |

| return disposed ?? (throw StateError('Image.debugDisposed is only available when asserts are enabled.')); |

| } |

| |

| /// Converts the [Image] object into a byte array. |

| /// |